Marije Baalman and I (Oeyvind) had a Skype meeting today. Here are some notes from the conversation:

First, we really need to find an alternative to Skype; the flakiness of the connection makes it practically unusable. A service that allows recording the conversation would be nice too.

We talked about a number of perspectives on the project, including Marije's role as an external advisor and commentator, references to other projects that may relate to ours in terms of mapping signals to musical parameters, possible implementation on embedded devices, and issues relating to the signal flow from analysis to mapping to modulator destination.

Marije mentioned Joel Ryan as an interesting artist doing live processing of acoustic instruments. His work seems closely related to our earlier work in T-EMP. Interesting to see and hear his work with Evan Parker. More info on his instruments and mapping setup would be welcome.

We discussed the prospect of working with embedded devices for the crossadaptive project. Marije mentioned the Bela project for the BeagleBone Black, done at Queen Mary University. There are of course a number of nice things about embedded devices, such as making self-contained instruments that are easy for musicians to use. However, we feel that at this stage our project is of such an experimental nature that it is more easily explored on a conventional computer. The technical hurdles of adapting it to an embedded device will be easier to tackle once the signal flow and processing needs are better defined. In relation to this we also discussed the different processes involved in our signal flow: Analysis, Mapping, Destination.

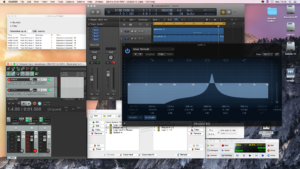

Our current implementation (based on the 2015 DAFx paper) has this division into analysis, mapping/routing and destination (the effect processing parameter being controlled). In the first incarnation of the toolkit, the mapping/routing was basically done at the destination. Lately, however, I’ve started reworking it to create a more generic mapping stage, allowing more freedom in what to control (i.e. any effect processor or DAW parameter). Marije suggested doing more preprocessing in the analysis stage, to create cleaner and more generically applicable control signals that are easier to use at the mapping stage. This coincides with our recent experience with low-level analyses such as spectral flux and crest: they work well on some instruments (e.g. vocals) as an indicator of the balance between tone and noise, but on other instruments (e.g. guitar) they work well only some of the time. Many of the current high-level analyses (e.g. in music information retrieval) seem in many respects to be geared towards song recognition, while we need some way of extracting higher-level features as continuous signals. The problem is to define and extract the musical meaning of a signal on a frame-by-frame basis. This will most probably differ between instruments, so a source-specific preprocessing stage seems reasonable.
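To make the idea of preprocessing in the analysis stage a bit more concrete, here is a minimal Python sketch. It is not the toolkit's actual code. It assumes each frame is already available as a list of magnitude-spectrum bins, uses one common definition each for crest (peak over mean) and flux (Euclidean distance between successive frames), and the names `OnePoleSmoother` and `normalise`, as well as the smoothing coefficient and range limits, are hypothetical and would have to be tuned per source.

```python
import math


def spectral_crest(mag):
    """Peak magnitude divided by mean magnitude of one frame.

    Close to 1 for flat (noise-like) spectra, larger for peaky (tonal) ones.
    """
    total = sum(mag)
    if total <= 0.0:
        return 0.0  # silent frame: no meaningful crest value
    return max(mag) / (total / len(mag))


def spectral_flux(prev_mag, mag):
    """Euclidean distance between two successive magnitude spectra."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(prev_mag, mag)))


class OnePoleSmoother:
    """First-order lowpass that turns a jittery per-frame feature
    into a steadier control signal (hypothetical helper)."""

    def __init__(self, coeff=0.9):
        self.coeff = coeff  # closer to 1.0 means heavier smoothing
        self.state = 0.0

    def process(self, x):
        self.state = self.coeff * self.state + (1.0 - self.coeff) * x
        return self.state


def normalise(x, lo, hi):
    """Scale x from the assumed range [lo, hi] to [0, 1], clipping outside it.

    lo and hi are source-specific assumptions (e.g. different for voice and guitar).
    """
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (x - lo) / (hi - lo)))
```

A source-specific preprocessing chain could then be something like `normalise(smoother.process(spectral_crest(frame)), lo, hi)`, with a different `lo`, `hi` and smoothing coefficient per instrument, so that the mapping stage always receives a signal in the same 0 to 1 range.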

The Digital Orchestra and IDMIL, and more specifically libmapper, might provide inspiration for our mapping module. We make a note of inspecting it more closely. The same goes for the Live Algorithms for Music project, and possibly also the Ircam OMAX project. Marije mentioned the 3Dmin project at UDK/TU Berlin, which uses exploration of arbitrary mappings as a method of influencing rather than directly controlling a destination parameter. This reminded me of earlier work by Palle Dahlstedt and also, come to think of it, our own modulation matrix for the Hadron synthesizer.
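The difference between influencing and directly controlling a parameter can be illustrated with a generic modulation matrix. The sketch below is an assumption-laden illustration in Python, not the Hadron implementation or libmapper's API: the function name `apply_modulation_matrix`, the parameter names and the 0 to 1 parameter range are all hypothetical. Each matrix cell holds a weight from one analysis signal to one destination, and every destination ends up as its base value plus the weighted sum of all sources routed to it.

```python
def apply_modulation_matrix(sources, matrix, base_values):
    """Combine analysis signals into destination parameter values.

    sources:     dict of source name -> current value (assumed in 0..1)
    matrix:      dict of (source name, destination name) -> weight
    base_values: dict of destination name -> value with no modulation applied
    Returns a dict of destination name -> modulated value, clipped to 0..1.
    """
    out = dict(base_values)
    for (src, dest), weight in matrix.items():
        # Several sources may push the same destination up or down.
        out[dest] = out.get(dest, 0.0) + weight * sources[src]
    return {dest: min(1.0, max(0.0, value)) for dest, value in out.items()}


# Hypothetical example: two analysis signals influencing two effect parameters.
sources = {"crest": 0.5, "flux": 0.25}
matrix = {
    ("crest", "cutoff"): 0.5,
    ("flux", "cutoff"): -1.0,
    ("flux", "delay_mix"): 2.0,
}
base_values = {"cutoff": 0.5, "delay_mix": 0.0}
params = apply_modulation_matrix(sources, matrix, base_values)
```

Because no single source owns a destination, changing one weight shifts the behaviour of the whole mapping rather than rewiring one connection, which is what makes this kind of structure suitable for exploring arbitrary mappings.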

Later we want Marije to test our toolkit in more depth and comment more specifically on what it lacks and how it can be improved. There are probably some small technical issues in using the toolkit under Linux, but as our main tools are cross-platform (Windows/OSX/Linux), these should be relatively easy to solve.