I’m Iver Jordal and this is my first blog post here. I have studied music technology for approximately two years and computer science for almost five years. Over the last six months I’ve been working on a specialization project that combines cross-adaptive audio effects with artificial intelligence methods. Øyvind Brandtsegg and Gunnar Tufte were my supervisors.

A significant part of the project has been about developing software that automatically finds interesting mappings (neural networks) from audio features to effect parameters. Among other things, the software can make one sound resemble another by means of cross-adaptive audio effects. For example, it can process white noise so that it sounds like a drum loop.

Drum loop (target sound):

White noise (input sound to be processed):

Since the software uses algorithms that are based on random processes to achieve its goal, the output varies from run to run. Here are three different output sounds:

These three sounds are basically white noise that has been processed by distortion and a low-pass filter. The effect parameters were controlled dynamically in a way that made the output sound like the drum loop (target sound).
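To give a rough idea of what "controlled dynamically" means here, below is a minimal Python sketch. It is not code from the project: the function name `process`, the tanh distortion, the one-pole low-pass filter, and the hand-written control curves are all assumptions made for illustration. In the actual project the control signals come from neural networks rather than a fixed envelope.

```python
import math
import random


def process(noise, drive_curve, cutoff_curve, sample_rate=44100):
    """Apply distortion, then a low-pass filter, with per-sample parameters."""
    out = []
    state = 0.0  # memory of the one-pole low-pass filter
    for x, drive, cutoff in zip(noise, drive_curve, cutoff_curve):
        # Distortion: more drive pushes the signal harder into tanh saturation
        distorted = math.tanh(drive * x)
        # One-pole low-pass coefficient for the current cutoff frequency (Hz)
        alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff / sample_rate)
        state += alpha * (distorted - state)
        out.append(state)
    return out


sample_rate = 44100
n = sample_rate  # one second of audio
rng = random.Random(0)
noise = [rng.uniform(-1.0, 1.0) for _ in range(n)]

# Hypothetical control signal: a decaying envelope that restarts four times
# per second, loosely imitating drum hits.
envelope = [math.exp(-8.0 * ((4.0 * i / sample_rate) % 1.0)) for i in range(n)]
drive_curve = [1.0 + 9.0 * e for e in envelope]
cutoff_curve = [100.0 + 8000.0 * e for e in envelope]

output = process(noise, drive_curve, cutoff_curve, sample_rate)
```

With these curves, each "hit" starts bright and loud and then decays into a dull, quiet rumble, even though the input is steady white noise.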

The software is open source and can be obtained here:

https://github.com/iver56/cross-adaptive-audio

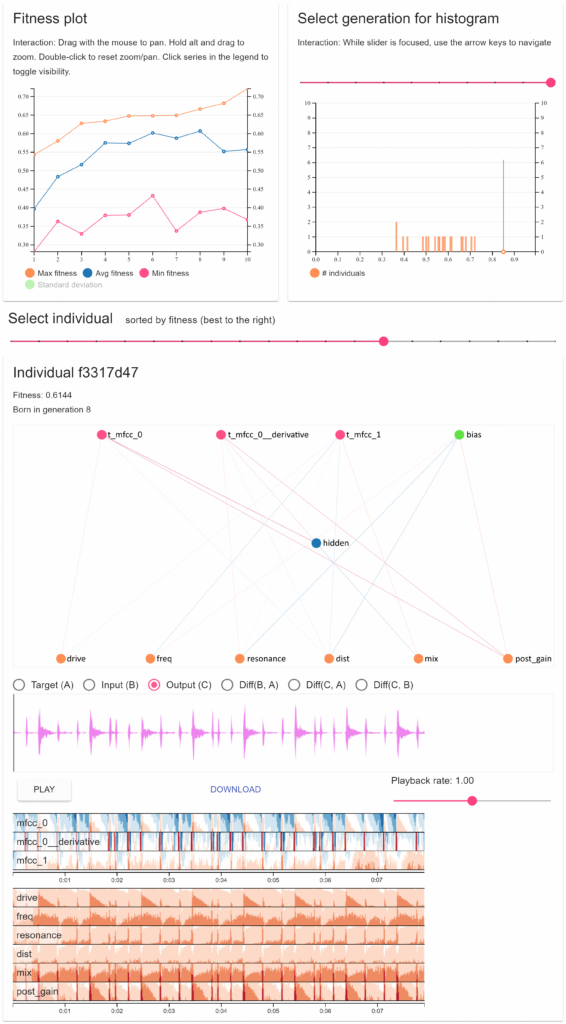

It includes an interactive tool that visualizes output data and lets you listen to the resulting sounds. It looks like this:

For more details about the project and the inner workings of the software, check out the project report:

Evolving Artificial Neural Networks for Cross-adaptive Audio (PDF, 2.5 MB)

Abstract:

Cross-adaptive audio effects have many applications within music technology, including for automatic mixing and live music. The common methods of signal analysis capture the acoustical and mathematical features of the signal well, but struggle to capture the musical meaning. Together with the vast number of possible signal interactions, this makes manual exploration of signal mappings difficult and tedious. This project investigates Artificial Intelligence (AI) methods for finding useful signal interactions in cross-adaptive audio effects. A system for doing signal interaction experiments and evaluating their results has been implemented. Since the system produces lots of output data in various forms, a significant part of the project has been about developing an interactive visualization tool which makes it easier to evaluate results and understand what the system is doing. The overall goal of the system is to make one sound similar to another by applying audio effects. The parameters of the audio effects are controlled dynamically by the features of the other sound. The features are mapped to parameters by using evolved neural networks. NeuroEvolution of Augmenting Topologies (NEAT) is used for evolving neural networks that have the desired behavior. Several ways to measure fitness of a neural network have been developed and tested. Experiments show that a hybrid approach that combines local euclidean distance and Nondominated Sorting Genetic Algorithm II (NSGA-II) works well. In experiments with many features for neural input, Feature Selective NeuroEvolution of Augmenting Topologies (FS-NEAT) yields better results than NEAT.
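As a rough illustration of a distance-based fitness measure like the one mentioned in the abstract, here is a minimal Python sketch. It assumes that "local" means comparing the target and output feature vectors frame by frame, and that distance is turned into fitness with 1 / (1 + distance). Both are assumptions made for this example, and the function name `local_euclidean_fitness` is hypothetical; see the report for the measures actually used.

```python
import math


def local_euclidean_fitness(target_features, output_features):
    """Return a score in (0, 1]; 1.0 means the feature sequences are identical.

    Each argument is a list of frames, and each frame is a list of
    feature values (for example, loudness and spectral centroid).
    """
    total = 0.0
    for target_frame, output_frame in zip(target_features, output_features):
        # Euclidean distance between the two feature vectors of this frame
        total += math.sqrt(
            sum((t - o) ** 2 for t, o in zip(target_frame, output_frame))
        )
    mean_distance = total / len(target_features)
    return 1.0 / (1.0 + mean_distance)


target = [[0.0, 0.0], [3.0, 4.0]]
identical = local_euclidean_fitness(target, target)
different = local_euclidean_fitness(target, [[0.0, 0.0], [0.0, 0.0]])
```

A score like this could serve as a single objective, or each feature's distance could be kept as a separate objective for a multi-objective method such as NSGA-II.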

Hi Iver,

This is fascinating.

I am working on something innovative in this field and would greatly appreciate it if you could spare a few minutes of your time to discuss your work over Skype or over the phone. Thanks!