First Oslo Session. Documentation of process

18.11.2016

Participants

Gyrid Kaldestad, vocal

Bernt Isak Wærstad, guitar

Bjørnar Habbestad, flute

Observer and Video

Mats Claesson

The session took place in one of the sound studios at the Norwegian Academy of Music, Oslo, Norway.

Gyrid Kaldestad (vocal) and Bernt Isak Wærstad (guitar) had one technical/setup meeting beforehand, and numerous emails about technical issues went back and forth before the session.

Bjørnar Habbestad (flute) was invited into the session.

The observer decided to make a video documentation of the session.

I’m glad I did, because I think it gives a good insight into the process. And a process it was!

The whole session lasted almost 8 hours and it was not until the very last 30 minutes that playing started.

I (Mats Claesson) am not going to comment on the performative and musical side of the session. The only reason for this is that the music making happened at the very end of the session, was very short, and was not recorded in a way that would let me evaluate it “in depth”. However, just watch the participants' comments at the end of the video. They are very positive.

I think that from the musicians' side it was rewarding and highly interesting. I am confident that the next session will generate a musical outcome that is substantial enough to be commented on, from both a performative and a musical side.

The video contains no processed sound from the very last playing because headphones were used, but you can listen to excerpts posted below the video.

Here is a link to the video

Reflections on the process given from the perspective of the musicians:

We agreed to make a limited setup to have better control over the processing, starting with basic sounds and basic processing tools so that we could more easily control the system in a musical way. We started with a tuning analysis for each instrument (voice, flute, guitar).

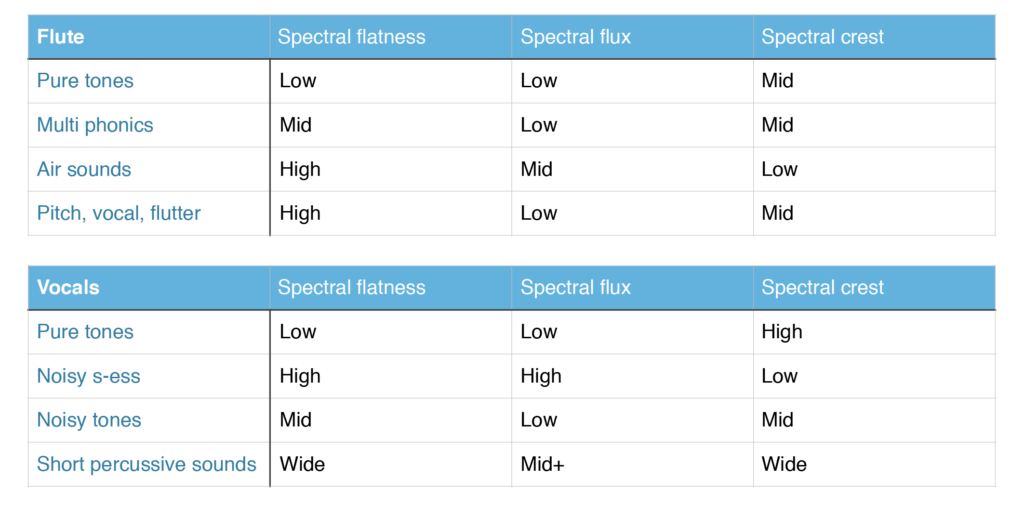

Instead of choosing analysis parameters up front, we analysed different playing techniques, e.g. non-tonal sounds (sss, shhh), multiphonics etc., and saw how the analyser responded. We also recorded short samples of the different techniques that each of us usually plays, so that we could investigate the analysis several times.

These are the analysis results we got:

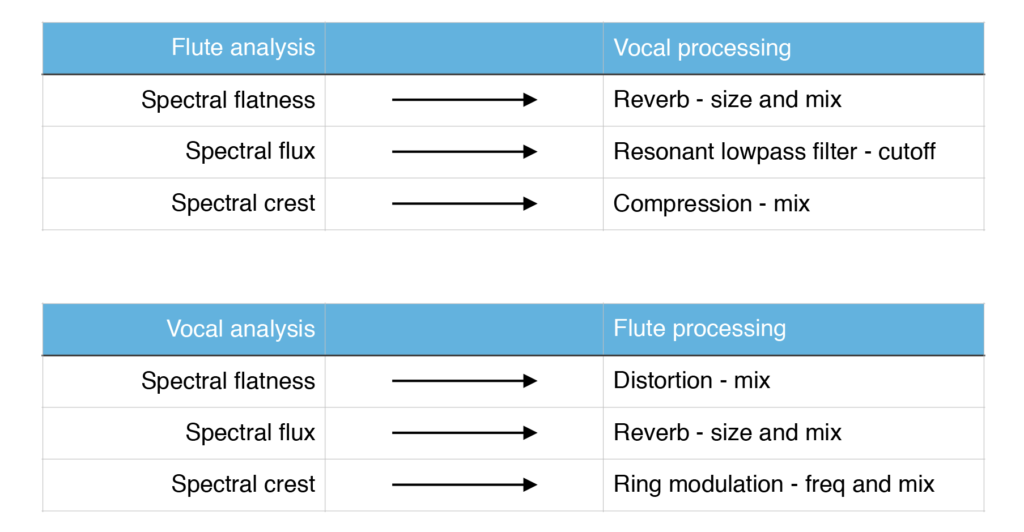

Since we’re all musicians experienced with live processing, we made a setup based on effects that we already know well and use in our live-electronic setups (reverb, filter, compression, ring modulation and distortion).

To set up meaningful mappings, we chose an approach that we called “spectral ducking”, where a certain musical feature on one instrument would reduce the same musical feature on the other – e.g. a sustained tonal sound produced by the vocalist would reduce harmonic musical features of the flute by applying ring modulation. Here is a complete list of the mappings used:

Excerpt #1 – Vocal and flute

Excerpt #2 – Vocal and flute

Excerpt #3 – Vocal and flute

Excerpt #4 – Vocal and flute
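The “spectral ducking” idea described above can be sketched roughly in code. This is only an illustration under stated assumptions, not the analyser tool's actual implementation: the function names `ducking_amount` and `smooth`, the threshold and depth parameters, and the assumption that analysis features arrive normalised to the range 0 to 1 are all hypothetical.

```python
def ducking_amount(feature, threshold=0.2, depth=1.0):
    """Map a normalised analysis feature (0..1) from one instrument
    to an effect amount (0..1) applied to the other instrument.

    Hypothetical mapping: below the threshold nothing happens; above it
    the effect amount grows linearly, scaled by depth and capped at 1.
    """
    if feature <= threshold:
        return 0.0
    scaled = (feature - threshold) / (1.0 - threshold)
    return min(1.0, scaled * depth)


def smooth(values, coeff=0.5):
    """Simple one-pole smoothing so the effect amount does not jump
    abruptly between analysis frames (coeff closer to 1 = slower)."""
    out = []
    state = 0.0
    for v in values:
        state = coeff * state + (1.0 - coeff) * v
        out.append(state)
    return out


# Example: a made-up "tonalness" curve from the voice drives the
# ring modulation mix on the flute.
vocal_tonalness = [0.0, 0.1, 0.6, 1.0, 1.0, 0.3]
flute_ringmod_mix = smooth([ducking_amount(f) for f in vocal_tonalness])
```

In this sketch, a sustained tonal sound from the vocalist (a high feature value over several frames) gradually raises the ring modulation mix on the flute, which in turn reduces the flute's harmonic character.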

Because of a lack of concise and precise analysis results from the guitar, combined with limited time, it wasn’t possible to set up mappings for the guitar and flute. We did, however, test out the guitar and flute in the last minutes of the session, where the guitar simply took the role of the vocal in terms of processing and mapping. Knowledge of the vocal analysis and mapping made it possible to perform with the same setup even though the input instrument had changed. Some short excerpts from this performance can be heard below.

Excerpt #5 – Guitar and flute

Excerpt #6 – Guitar and flute

Excerpt #7 – Guitar and flute

Reflections and comments:

- We experienced the importance of exploring new tools like this on a known system. Since none of us knew Reaper from before, we spent quite a lot of time learning a new system (both while preparing and during the session).

- Could the analyser's meters be turned the other way around? They are a bit difficult to read sideways.

- It would be nice to be able to record and export control data from the analyser tool, so that the data can be used later in a synthesis.

- Could it be an idea to have more analyser sources per channel? The Keith McMillen SoftStep mapping software could possibly be something to look at for inspiration.

- The output is surprisingly musical – maybe this is a result of all the discussions and reflections we had before we did the setup and before we played?

- The outcome is something other than playing with live electronics – it is immediate and you can actually focus on the listening – very liberating from a live electronics point of view!

- The system is merging the different sounds in a very elegant way.

- Knowing that you have an influence on your fellow musicians' output forces you to think in new ways when working with live electronics.

- Our experience is that this is similar to working acoustically.