The first versions of the MIDIator could only sum two analysis signals, with separate scaling and sign inversion for each one. We have recently added two new methods for mixing these two signals. This post explains how they work and which problems they are intended to solve.

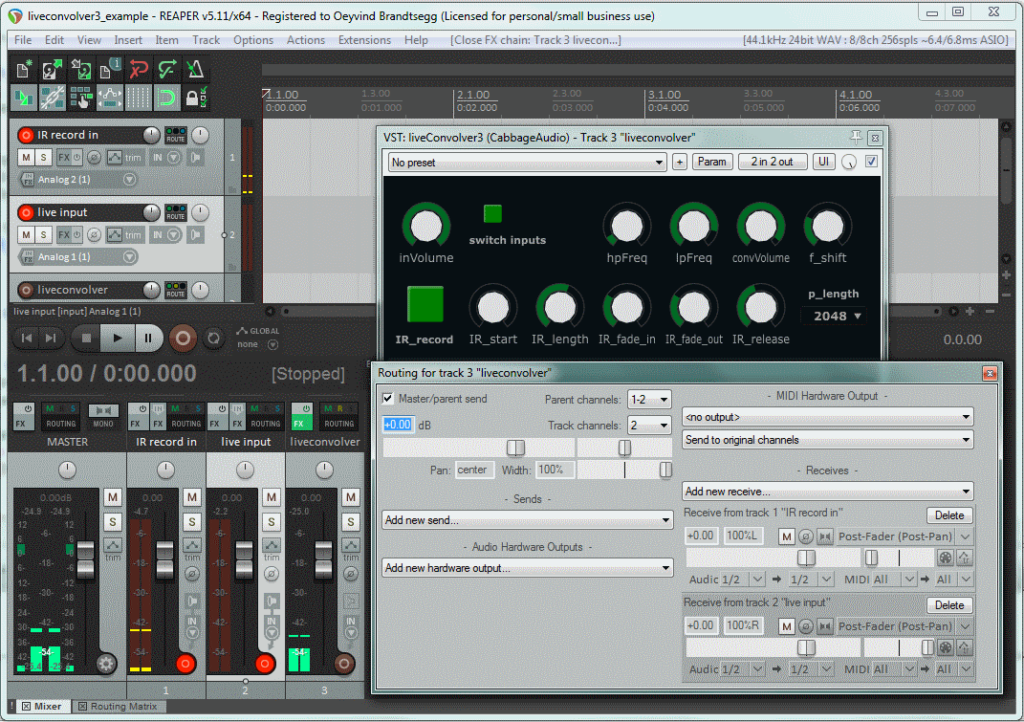

For all mix methods, the MIDI output configuration is on the right. All modules output MIDI controller values. We can set the MIDI channel and controller number, enable or disable the module, and enter notes about how the module is mapped and used. The notes are saved with the project.
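Each module's output ends up as a standard MIDI Control Change message. The sketch below shows how a channel, a controller number and a value map onto the three bytes of such a message. It follows the MIDI 1.0 message format and is not taken from the MIDIator source. The function name `control_change` is hypothetical.

```python
def control_change(channel: int, controller: int, value: int) -> bytes:
    """Encode a MIDI Control Change message (hypothetical helper, not MIDIator code).

    channel is 1-16, as shown in most user interfaces; controller and value are 0-127.
    """
    if not 1 <= channel <= 16:
        raise ValueError("MIDI channel must be in the range 1-16")
    if not (0 <= controller <= 127 and 0 <= value <= 127):
        raise ValueError("controller number and value must be in the range 0-127")
    # Status byte: 0xB0 (Control Change) combined with the zero-based channel number.
    return bytes([0xB0 | (channel - 1), controller, value])


# Example: controller 74 on channel 2 with value 100.
print(control_change(2, 74, 100).hex())  # b14a64
```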

Do take care, however. For your own records, take a screenshot of your settings and save it with your project. The plugins can and will change as development of the project continues. If this changes the GUI configuration (for example, the number of user interface elements), there is a high probability that not everything will be recalled correctly. In that case you must reconstruct your settings from the previously saved screenshot.

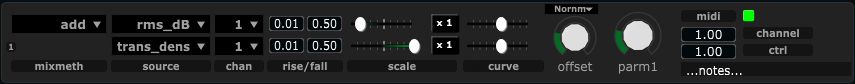

Add

Each of the two signals has its own filtering, with separate settings for the rise and fall times. The two signals are scaled (the scale range is -1 to +1) and then added together. If both signals are scaled positively, each of them affects the output positively. If one is scaled negatively and the other positively, more complex interactions between them emerge. For example, with rms (amplitude) scaled negatively and transient density scaled positively, the output will increase with high transient density, but only if we are not playing loud.
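The Add method can be sketched as a per-signal smoothing filter followed by a scaled sum. This is a minimal illustration under several assumptions that are not documented for the MIDIator: the filter is modelled as a one-pole lowpass whose coefficient switches between the rise and fall times, the analysis signals are normalized to 0-1 and updated at a hypothetical 100 Hz rate, and the sum is clipped to 0-1 before being mapped to a 0-127 controller value. All names are hypothetical.

```python
import math


def smoothing_coef(time_s: float, rate_hz: float) -> float:
    """One-pole coefficient for a time constant in seconds (0 s means no smoothing)."""
    if time_s <= 0.0:
        return 0.0
    return math.exp(-1.0 / (time_s * rate_hz))


class RiseFallFilter:
    """Assumed filter model: one-pole lowpass with separate rise and fall times."""

    def __init__(self, rise_s: float, fall_s: float, rate_hz: float = 100.0):
        self.rise_coef = smoothing_coef(rise_s, rate_hz)
        self.fall_coef = smoothing_coef(fall_s, rate_hz)
        self.y = 0.0

    def process(self, x: float) -> float:
        # A rising input uses the rise time; a falling (or equal) input uses the fall time.
        coef = self.rise_coef if x > self.y else self.fall_coef
        self.y = coef * self.y + (1.0 - coef) * x
        return self.y


def mix_add(a: float, b: float, scale_a: float, scale_b: float) -> float:
    """Scale each signal by a factor in [-1, 1], add them, and clip the sum to [0, 1] (assumed)."""
    total = scale_a * a + scale_b * b
    return min(max(total, 0.0), 1.0)


def to_cc_value(x: float) -> int:
    """Map a normalized value in [0, 1] to a MIDI controller value in 0-127."""
    return round(x * 127)


# rms scaled by -1, transient density scaled by +1.
print(to_cc_value(mix_add(0.2, 0.9, -1.0, 1.0)))  # 89: soft playing, high density
print(to_cc_value(mix_add(0.9, 0.9, -1.0, 1.0)))  # 0: loud playing cancels the density
```

With the negative scaling on rms, loud playing pulls the sum down. This is how the "only if we are not playing loud" interaction arises from a plain addition.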

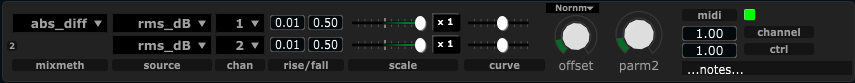

Abs_diff

This somewhat cryptic term refers to the absolute difference between two signals. We can use it to create an interaction model where the output goes high only if the two analysis signals are very different. For example, if we analyze amplitude (rms) from two different musicians, the resulting signal will be low as long as they both play in the same dynamic register. If one plays loud while the other plays soft, the output will be high, regardless of which of the two plays loud. It can of course also be applied to two different analysis signals from the same musician, for example the difference between pitch and the spectral centroid.
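As a minimal sketch, the core of Abs_diff is |a - b|, computed frame by frame. This assumes both analysis signals are normalized to the same 0-1 range. The function name and the rms values below are hypothetical.

```python
def abs_diff(a: float, b: float) -> float:
    """Absolute difference of two analysis signals (assumed normalized to [0, 1])."""
    return abs(a - b)


# Hypothetical rms values for two musicians over four analysis frames.
rms_player_1 = [0.25, 0.5, 0.75, 0.25]
rms_player_2 = [0.25, 0.5, 0.25, 0.75]
print([abs_diff(a, b) for a, b in zip(rms_player_1, rms_player_2)])
# [0.0, 0.0, 0.5, 0.5]
```

The last two frames give the same output even though the musicians have swapped roles. This is the "regardless of which of the two plays loud" property.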

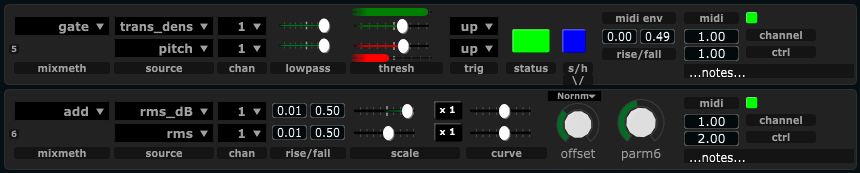

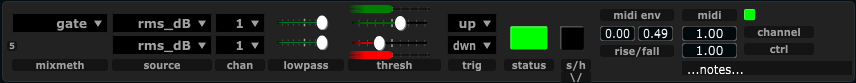

Gate

The gate mix method can be used to turn things on or off. It can also be used to enable or disable the processing of another MIDIator module, effectively acting as a sample and hold gate. Here, the two input channels serve different purposes: one channel turns the gate on, the other turns the gate off. Each channel has its own activation threshold, plus a setting that selects whether the signal must cross the threshold moving upwards or downwards to activate. For simple purposes (with the same signal feeding both channels), this acts like a Schmitt trigger, that is, a comparator with hysteresis. Because the activation and deactivation thresholds can be different, a signal hovering around a single threshold will not make the gate flicker on and off, which reduces jitter noise in the output.
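The two-channel gate can be modelled as a small state machine. This is a sketch under stated assumptions, not the plugin's code. A channel "activates" when its signal crosses the threshold between two consecutive frames in the selected direction. The on channel is only checked while the gate is off, and the off channel only while it is on. The names `crossed` and `run_gate` are hypothetical. Feeding the same signal to both channels, with a higher "up" threshold and a lower "down" threshold, gives the Schmitt trigger behaviour.

```python
def crossed(prev: float, cur: float, threshold: float, direction: str) -> bool:
    """True if the signal passed the threshold between two frames in the given direction."""
    if direction == "up":
        return prev < threshold <= cur
    return prev > threshold >= cur


def run_gate(on_sig, off_sig, on_thr, off_thr, on_dir="up", off_dir="down"):
    """Return the gate state (True = on) for each frame of two equal-length input signals."""
    if not on_sig:
        return []
    is_on = False
    states = [is_on]  # the first frame has no previous value to compare against
    for i in range(1, len(on_sig)):
        if not is_on and crossed(on_sig[i - 1], on_sig[i], on_thr, on_dir):
            is_on = True
        elif is_on and crossed(off_sig[i - 1], off_sig[i], off_thr, off_dir):
            is_on = False
        states.append(is_on)
    return states


# Schmitt trigger: same signal on both channels, on at 0.6 (up), off at 0.4 (down).
signal = [0.0, 0.5, 0.7, 0.55, 0.45, 0.35, 0.5, 0.65]
print(run_gate(signal, signal, on_thr=0.6, off_thr=0.4))
# [False, False, True, True, True, False, False, True]
```

While the signal wanders between 0.4 and 0.6 (the values 0.55, 0.45 and 0.5), the gate keeps its current state. A single threshold would have toggled on each of those small movements.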

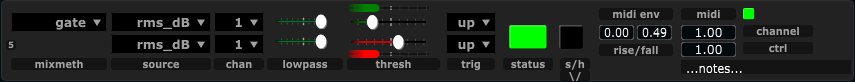

It can also be used to create more unconventional gates. A simple variation lets us create a gate that is activated only if the input signal is within a specified band. To do this, the activation threshold must be set lower than the deactivation threshold.

The up/down triggers can be adjusted to fine-tune how the gate responds to the input signal. For example, consider the band-activated gate described above. If we change the deactivation trigger to “down”, the gate will only turn off after the signal has been above the deactivation threshold and is then moving downwards.
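The band-activated gate and the effect of the "down" deactivation trigger can be compared with a minimal model. This sketch assumes threshold-crossing detection between consecutive frames, with the on channel checked only while the gate is off and the off channel only while it is on. The names `crossed` and `run_gate`, and the threshold values 0.3 and 0.7, are hypothetical.

```python
def crossed(prev: float, cur: float, threshold: float, direction: str) -> bool:
    """True if the signal passed the threshold between two frames in the given direction."""
    if direction == "up":
        return prev < threshold <= cur
    return prev > threshold >= cur


def run_gate(on_sig, off_sig, on_thr, off_thr, on_dir, off_dir):
    """Return the gate state (True = on) for each frame of two equal-length input signals."""
    is_on = False
    states = [is_on] if on_sig else []
    for i in range(1, len(on_sig)):
        if not is_on and crossed(on_sig[i - 1], on_sig[i], on_thr, on_dir):
            is_on = True
        elif is_on and crossed(off_sig[i - 1], off_sig[i], off_thr, off_dir):
            is_on = False
        states.append(is_on)
    return states


signal = [0.0, 0.5, 0.8, 0.9, 0.6, 0.2, 0.5]
# Activation threshold (0.3) is lower than the deactivation threshold (0.7).
print(run_gate(signal, signal, 0.3, 0.7, on_dir="up", off_dir="up"))
# [False, True, False, False, False, False, True]
print(run_gate(signal, signal, 0.3, 0.7, on_dir="up", off_dir="down"))
# [False, True, True, True, False, False, True]
```

With an "up" deactivation trigger, the gate is on only while the signal rises through the 0.3 to 0.7 band. With "down", it stays on above 0.7 and turns off when the signal falls back through 0.7.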

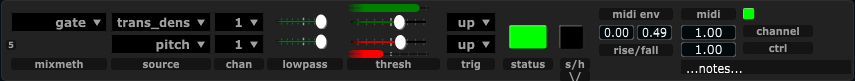

So far, we have only looked at examples where the two input signals to the gate are the same signal. Since the two input signals can be different (and can even come from two different acoustic sources), highly intricate gate behaviour can be constructed. Even though conceiving such signal-interdependent gates can be complex (working out which signals could interact in a meaningful way), the actual operation of the gate is technically no different. Just for the sake of example, here is a gate that turns on when the transient density goes high, and then turns off when the pitch goes high. To activate the gate again, the transient density must first go low, then high.
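The transient density / pitch gate can be sketched with the same kind of crossing-based model, now fed with two different signals. This is an assumption-laden illustration: both signals are taken to be normalized to 0-1, both thresholds (0.5) and the frame values are hypothetical, and the names `crossed` and `run_gate` are not the plugin's.

```python
def crossed(prev: float, cur: float, threshold: float, direction: str) -> bool:
    """True if the signal passed the threshold between two frames in the given direction."""
    if direction == "up":
        return prev < threshold <= cur
    return prev > threshold >= cur


def run_gate(on_sig, off_sig, on_thr, off_thr, on_dir, off_dir):
    """Gate states per frame: on_sig can turn the gate on, off_sig can turn it off."""
    is_on = False
    states = [is_on] if on_sig else []
    for i in range(1, len(on_sig)):
        if not is_on and crossed(on_sig[i - 1], on_sig[i], on_thr, on_dir):
            is_on = True
        elif is_on and crossed(off_sig[i - 1], off_sig[i], off_thr, off_dir):
            is_on = False
        states.append(is_on)
    return states


# Hypothetical analysis frames for transient density (on channel) and pitch (off channel).
density = [0.1, 0.8, 0.9, 0.9, 0.9, 0.2, 0.8]
pitch = [0.2, 0.2, 0.3, 0.9, 0.9, 0.9, 0.9]
print(run_gate(density, pitch, 0.5, 0.5, on_dir="up", off_dir="up"))
# [False, True, True, False, False, False, True]
```

After the pitch turns the gate off, the density stays high, but the gate stays off. It only turns on again once the density has dipped below the threshold and risen through it again.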

Sample and hold

The gate mix method can also affect the operation of another MIDIator module. This is currently hardcoded so that it only affects the next module (the one directly below the gate). When the gate is on, the next MIDIator module works as normal. When the gate is turned off, that module retains the value it had reached at the moment the gate was turned off. In traditional signal processing terms, this is sample and hold. To enable this function, turn on the button labeled “s/h”.
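The hold behaviour applied to the next module's output can be sketched as follows. This minimal version assumes the gate state and the module's output are available per frame, and that the output starts from a hypothetical initial value of 0.0 if the gate is off from the beginning. It only models the output value. Whether the downstream module's internal filters keep running while held is not described here. The function name is hypothetical.

```python
def sample_and_hold(values, gate_states, initial=0.0):
    """Pass values through while the gate is on; hold the last one while it is off.

    Strictly speaking this is track-and-hold: the output follows the input while
    the gate is on and freezes at the value it had when the gate turned off.
    """
    held = initial  # assumed starting value before the gate has ever been on
    out = []
    for value, gate_on in zip(values, gate_states):
        if gate_on:
            held = value  # gate on: follow the next module's own output
        out.append(held)  # gate off: keep the value reached when the gate closed
    return out


values = [0.1, 0.2, 0.3, 0.4, 0.5]
gate = [True, True, False, False, True]
print(sample_and_hold(values, gate))  # [0.1, 0.2, 0.2, 0.2, 0.5]
```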