Location: Kjelleren, Olavskvartalet, NTNU, Trondheim

Participants: Maja S. K. Ratkje, Siv Øyunn Kjenstad, Øyvind Brandtsegg, Trond Engum, Andreas Bergsland, Solveig Bøe, Sigurd Saue, Thomas Henriksen

Session objective and focus

Although the trio BRAG RUG has experimented with crossadaptive techniques in rehearsals and concerts during the last year, this was the first dedicated research session on the issues specific to these techniques. The musicians were familiar with the interaction model of live processing; the electronic modification of the instrumental sound in real time is familiar ground. Notably, the basic concepts of feature extraction, and the use of these features as modulators for the effects processing, were also familiar ground for the musicians before the session. Still, we opted to start with very basic configurations of features and modulators as a means of warming up. The warm-up serves several purposes: it tunes the performative attention and the listening mode, and it is also a way of verifying that the technical setup works as expected.

The session objective and focus is, simply put, to explore the crossadaptive interactions further in practice. As of now, the technical solutions work well enough to start making music. The issues that arise during musical practice are many-faceted, and we have opted not to put any specific limitations or preconceived directions on how these explorations should be made.

As preparation for the session, we (Oeyvind) had prepared a set of possible configurations (sets of features, mapped to sets of effects controllers). As the musicians were familiar with the concepts and techniques, we expected that a larger set of possible situations to explore would be necessary. As it turned out, we spent more time investigating the simpler situations, so we did not get to test all of the prepared interaction situations. The extra time spent on the simpler situations was not due to any unforeseen problems, but rather because there were interesting issues to explore even in the simpler situations, and it seemed more valuable to explore those fully than to test a large set of situations thinly. With this in mind, we could easily have continued our explorations for several more days.

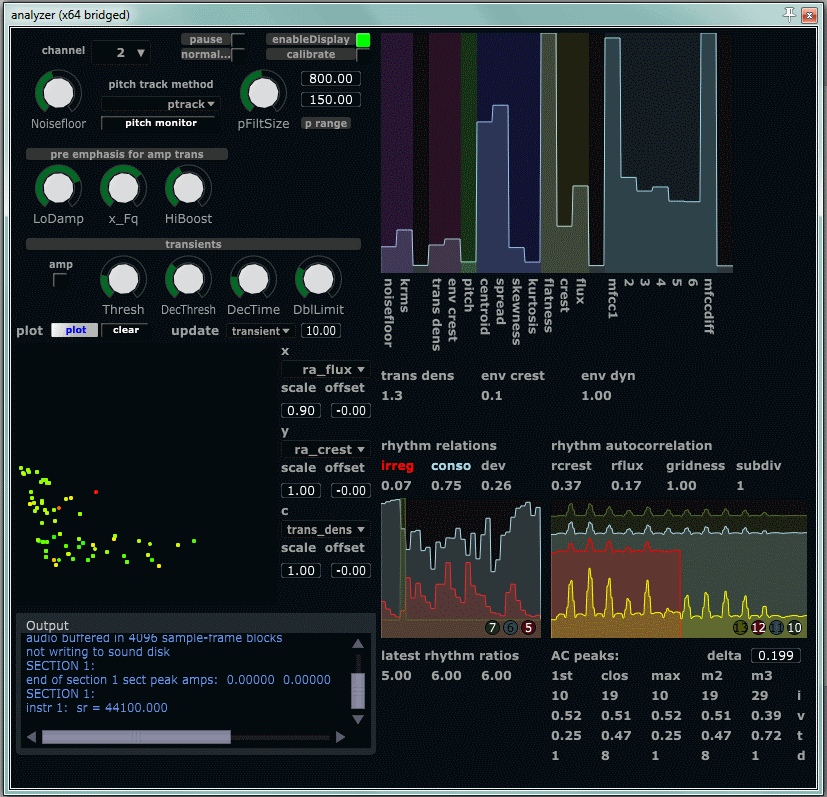

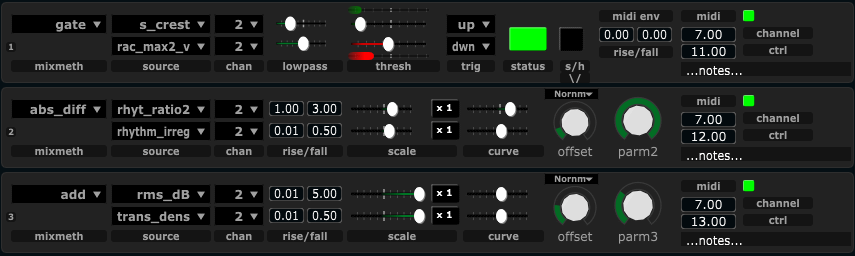

The musicians’ previous experience with said techniques provided an effective base for communication around the ideas, issues and problems. The musicians’ ideas were clearly expressed, and easily translated into modifications in the mapping. With regard to the current status of the technical tools, we were quickly able to modify the mappings based on ad hoc suggestions during the sessions, for example by changing the types of features (a plethora of usable features is readily extracted), inverting, scaling, combining different features, gating etc. We did not get around to testing the new modulator mapping of “absolute difference” at all, so we note that as a future experiment. One thing Oeyvind notes as still being difficult is changing the modulation destination. This is presently done by changing MIDI channels and controller numbers, with no reference to what these controller numbers are mapped to. This also partly relates to the Reaper DAW lacking a single place to look up active modulators. It seems there are no known technologies for our MIDIator to poll the DAW for the names of the modulator destinations (the effect parameter ultimately being controlled). Our MIDIator has a “notes” field that allows us to write any kind of notes, and it is assumed to be used for jotting down destination parameter names. One obvious shortcoming of this is that it relies solely on manual (human) updates. If one changes the controller number without updating the notes, there will be a discrepancy, perhaps even more confusing than not having any notes at all.

Takes

- [numbered as take 1] Amplitude (rms_dB) on voice controls reverb time (size) on drums. Amplitude (rms_dB) on drums controls delay feedback on vocals. In both cases, higher amplitude means more (longer, bigger) effect.

Initial comments: When the effect is big on high amplitude, the action is very parallel. The energy flows in only one direction (intensity-wise). In this particular take, the effects modulation was a bit on/off, not responding to the playing in a nuanced manner. Perhaps the threshold for the reverb was also too low, so it responded too much (even to low amplitude playing). It was a bit unclear for the performers how much control they had over the modulations.

The use of two time-based effects at the same time tends to melt things together, as both work along the same direction/dimension (when is this wanted or unwanted in a musical situation?).

Reflection when listening to take 1 after the session: We have now experienced two sessions where the musicians preferred controlling the effects in an inverted manner (not like here in take 1, but like we do later in this session, e.g. take 3). Still, when listening to the take, it sometimes feels like a natural extension of the performance to simply have more effect when the musicians “lean into it” and play loud. Perhaps we could devise a mapping strategy where the fast response of the system is to reduce the effect (e.g. the reverb time), but if the energy (e.g. the amplitude) remains high over an extended duration (e.g. longer than 30 seconds), the effect will increase again. This kind of mapping strategy suggests that we could create quite intricate interaction schemes with just some simple feature extraction (like amplitude envelope), and that this could be used to create intuitive and elaborate musical forms (“form” here defined as the evolution of effects mapping automation over time).
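The two-timescale idea above can be sketched in a few lines. This is only an illustration under stated assumptions, not something we ran in the session: the input is assumed to be an amplitude feature already normalized to 0..1, and the names `two_stage_effect_amount`, `loud_threshold`, `sustain_seconds` and `ramp_seconds` are hypothetical.

```python
def two_stage_effect_amount(levels, dt, loud_threshold=0.6,
                            sustain_seconds=30.0, ramp_seconds=10.0):
    """Map normalized amplitude values (0..1) to an effect amount (0..1).

    Fast response: louder input gives LESS effect (inverted mapping).
    Slow response: once the input has stayed above loud_threshold for more
    than sustain_seconds, the mapping gradually flips so that loud playing
    gives MORE effect. All parameter values are hypothetical.
    """
    amounts = []
    loud_time = 0.0  # how long the input has been continuously loud
    for level in levels:
        if level >= loud_threshold:
            loud_time += dt
        else:
            loud_time = 0.0  # any quiet moment resets the slow layer
        inverted = 1.0 - level
        if loud_time > sustain_seconds:
            # Crossfade from the inverted to the direct mapping.
            blend = min(1.0, (loud_time - sustain_seconds) / ramp_seconds)
        else:
            blend = 0.0
        amounts.append((1.0 - blend) * inverted + blend * level)
    return amounts
```

With one analysis value per second (`dt=1.0`), a sustained loud passage first gives a small effect amount, and the amount only starts to rise after the 30 second mark.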

- [numbered as take 1.2] Same mappings as in take 1, with some fine tuning: more direct sound on drums; less reverb on drums; shorter release on the drums’ amplitude envelope (vocal delay feedback stops sooner when the drums go from loud to soft); more nuanced disposition of vocal amplitude tracking to reverb size.

- [numbered as take 1.3] Inverted mapping, using the same features and the same effects parameters as in take 1 and 2. Here, louder drums mean less feedback of the vocal delay; similarly, louder vocals give shorter reverb time on the drums.

There was a consensus amongst all people involved in the session that this inverted mapping is “more interesting”. It also leads to the possibility for some nice timbral gestures; When the sound level goes from loud to quiet, the room opens up and prolongs the small quiet sounds. Even though this can hardly be described as a “natural” situation (in that it is not something we can find in nature, acoustically), it provides a musical tension of opposing forces, and also a musical potential for exploring the spatiality in relation to dynamics.
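As an illustration of the difference between the direct and the inverted mapping, here is a minimal sketch of scaling an amplitude feature in dB to a 7-bit MIDI controller value. The function name `db_to_cc` and the dB range (`floor_db`, `ceil_db`) are assumptions made for the example; they are not the actual settings used in the session.

```python
def db_to_cc(rms_db, floor_db=-60.0, ceil_db=0.0, invert=False):
    """Scale an amplitude in dB to a MIDI controller value (0..127).

    floor_db and ceil_db are hypothetical range limits; values outside
    the range are clamped. With invert=True, louder input gives a
    LOWER controller value (e.g. shorter reverb time).
    """
    norm = (rms_db - floor_db) / (ceil_db - floor_db)
    norm = max(0.0, min(1.0, norm))  # clamp to 0..1
    if invert:
        norm = 1.0 - norm
    return round(norm * 127)


# Direct mapping: loud playing opens the effect fully.
loud_direct = db_to_cc(0.0)
# Inverted mapping: loud playing closes the effect, quiet playing opens it.
loud_inverted = db_to_cc(0.0, invert=True)
quiet_inverted = db_to_cc(-60.0, invert=True)
```

In the inverted case, the quiet sounds after a loud passage get the largest controller value, which corresponds to the room opening up as described above.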

- [numbered as take 2] Similar to the mapping for take 3, but with a longer release time, meaning the effect will take longer to come back after having been reduced.

The interaction between the musicians, and their intentional playing of the effects control, is tangible in this take. One can also hear the drummer’s shouts of approval here and there (0:50, 1:38).
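The release time mentioned for this take can be illustrated with a simple one-pole envelope follower with separate attack and release times. This is a generic textbook-style sketch, not the analyzer used in the project; the function name and the default times are assumptions.

```python
import math


def envelope_follower(samples, sample_rate, attack_s=0.01, release_s=0.5):
    """Track the amplitude envelope of a signal.

    The envelope rises with time constant attack_s and falls with time
    constant release_s (both hypothetical defaults). A longer release_s
    means the envelope stays high longer after the playing gets quiet.
    """
    attack_coef = math.exp(-1.0 / (attack_s * sample_rate))
    release_coef = math.exp(-1.0 / (release_s * sample_rate))
    env = 0.0
    out = []
    for x in samples:
        level = abs(x)
        # Use the attack coefficient when rising, release when falling.
        coef = attack_coef if level > env else release_coef
        env = coef * env + (1.0 - coef) * level
        out.append(env)
    return out
```

When the envelope drives an inverted mapping, a longer release keeps the envelope high for longer after a loud passage, so the effect takes longer to come back.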

- [numbered as take 3] Changing the features extracted to control the same effects. Still controlling reverb time (size) and delay feedback. Using transient density on the drums to control delay feedback for the vocals, and pitch of the vocals to control reverb size for the drums. Faster drum playing means more delay feedback on vocals. Higher vocal pitch means longer reverberation on the drums.

Maja reacted quite strongly to this mapping, because pitch is a very prominent and defining parameter of the musical statement. Linking it to control a completely different parameter brings something very unorthodox into the musical dialogue. She was very enthusiastic about this opportunity, being challenged to exploit this uncommon link. It is not natural or intuitive at all, and is thus hard work for the performer, as one’s intuitive musical response and expression is contradicted directly. She would make an analogy to a “score”, like written scores for experimental music. In the project we have earlier noted that the design of interaction mappings (how features are mapped to effects) can be seen as compositions. It seems we think in the same way about this, even though the terminology is slightly different. For Maja, a composition involves form (over time), while a score to a larger extent can describe a situation. Oeyvind, on the other hand, uses “composition” to describe any imposed guideline put on the performer, anything that instructs the performer in any way. Regardless of the specifics of terminology, we note that the pitch-to-reverb-size mapping was effective in creating a radical or unorthodox interaction pattern.

It would be tempting to also test this kind of mapping in the inverted manner, letting transient density on the drums give less feedback, and lower pitches give larger reverbs. At this point in the session, however, we decided to spend the last hour on trying something entirely different, just to have touched upon other kinds of effects as well.

- [numbered as take 4] Convolution and resonator effects. We tested a new implementation of the live convolver. Here, the impulse response (IR) for the convolution is live sampled, and one can start convolving before the IR recording is complete. An earlier implementation was the subject of another blog post; the current implementation is less prone to clicks during recording and considerably less CPU intensive. The amplitude of the vocals is used to control a gate (Schmitt trigger) that in turn controls the live sampling. When the vocal signal is stronger than the selected threshold, recording starts, and recording continues as long as the vocal signal is loud. The signal to be recorded is the sound of the drums. The vocal signal is used as the convolution input, so any utterance on the vocals gets convolved with the recent recording of the drums.

In this manner, the timbre of the drums is triggered (excited, energized) by the actions of the vocal. Similarly, we have a resonator capturing the timbral character of the vocal, and this resonator is triggered/excited/energized by the sound of the drums. Transient density of the drums is run through a gate (Schmitt trigger), similar to the one used on the vocal amplitude. Fast playing on the drums triggers recording of the timbral resonances of the voice. The resonator sampling (one could also say resonator tuning) is a one-shot process, triggered each time the drums have been playing slowly and then change to faster playing.

Summing up the mapping: Vocal amplitude triggers IR sampling (using drums as source, applying the effect to the vocals). Drums transient density triggers vocal resonance tuning (applying the effect on the drums).
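The gating used in this take can be sketched as a Schmitt trigger, that is, a gate with separate open and close thresholds (hysteresis) so it does not flutter when the control signal hovers around a single threshold. The sketch below is a generic illustration: the function names and the threshold values are assumptions, and the control values stand in for a normalized feature such as vocal amplitude or drum transient density.

```python
def schmitt_trigger(values, low, high):
    """Return the gate state (True = open) for each input value.

    The gate opens when the value reaches `high` and only closes again
    when the value falls to `low` or below.
    """
    state = False
    states = []
    for v in values:
        if not state and v >= high:
            state = True
        elif state and v <= low:
            state = False
        states.append(state)
    return states


def rising_edges(states):
    """Indices where the gate goes from closed to open (one-shot triggers)."""
    return [i for i in range(len(states))
            if states[i] and (i == 0 or not states[i - 1])]


# Hypothetical control signal and thresholds.
control = [0.1, 0.5, 0.8, 0.5, 0.7, 0.2, 0.5, 0.9]
gate = schmitt_trigger(control, low=0.3, high=0.6)
triggers = rising_edges(gate)
```

For the IR sampling, recording would run for as long as the gate state is open. For the resonator tuning, only the rising edges matter, since that is a one-shot process triggered when the drums change from slow to faster playing.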

The musicians very much liked playing with the resonator effect on the drums. It gave a new dimension to taking features from the other instrument and applying them to one’s own. In some ways it resembles “unmoderated” (non-cross-adaptive) improvising together, as in that setting these two specific musicians also commonly borrow material from each other. The convolution process, on the other hand, seemed a bit more static, like one-shot playback of a sample (it is not, although it is somewhat similar). The convolution process can also be viewed as getting delay patterns from the drum playing. Somehow, the exact patterns do not differ so much when fed a dense signal, so changes in delay patterns are not so dramatic. This can be exploited by intentionally playing in such a way as to reveal the delay patterns. Still, that is a quite specific and narrow musical situation. Another thing one might expect to get from IR sampling is capturing “the full sound” of the other instrument. Perhaps the drums are not dramatically different/dynamic enough to release this potential, or perhaps the drum playing could be adapted to the IR sampling situation, putting more emphasis on different sonic textures (wooden sounds, boomy sounds, metallic sounds, click-y sounds). In any case, for our experiments in this session, we did not seem to release the full expected potential of the convolution technique.

Comments:

Most of the specific comments are given for each take above, but here are some general remarks for the session:

Status of tools:

We seem to have more options (in the tools and toys) than we have time to explore, which is a good indication that we can perhaps relax the technical development and focus more on practical exploration for a while. It also indicates that we could (perhaps should) book practical sessions over several consecutive days, to allow more in-depth exploration.

Making music:

It also seems that we are well on the way to actually making music with these techniques, even though there is still quite a bit of resistance in the techniques. What is good is that we have found some situations that work well musically (for example when amplitude inversely affects room size). Then we can expand and contrast these with new interaction situations where we experience more resistance (for example like we see when using pitch as a controller). We also see that even the successful situations appear static after some time, so the urge to manually control and shape the result (like in live processing) is clearly apparent. We can easily see that this is what we will probably do when playing with these techniques outside the research situation.

Source separation:

During this studio situation, we put the vocals and drums in separate rooms to avoid audio bleed between the instruments. This made for a clean and controlled input signal to the feature extractor, and we were able to better determine the quality of the feature extraction. Earlier sessions done with both instruments in the same room gave much more crosstalk/bleed, and we would sometimes be unsure if the feature extractor worked correctly. As of now it seems to work reasonably well. Two days after this session, we also demonstrated the techniques on stage with amplification via P.A. This proved (as expected) very difficult due to a high degree of bleed between the different signals. We should consider making a crosstalk-reduction processor. This might be implemented as a simple subtraction of one time-delayed spectrum from the other, introducing additional latency to the audio analysis. Still, the effects processing for audio output need not be affected by the additional latency, only the analysis. Since many of the things we want to control change relatively slowly, the additional latency might be bearable.
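To make the crosstalk-reduction idea a little more concrete, here is a rough sketch of subtracting a time-delayed, scaled magnitude spectrum of one microphone from the other, frame by frame. It is only a thought experiment, not an implemented or tested processor: the function name `reduce_bleed`, the frame delay and the bleed gain are hypothetical, and in practice they would have to be estimated from the microphone distances and levels. The input is assumed to be magnitude spectra that have already been computed, one list of bin magnitudes per analysis frame.

```python
def reduce_bleed(target_frames, bleed_frames, delay_frames=1, bleed_gain=0.5):
    """Subtract a delayed, scaled copy of the other mic's magnitude spectrum.

    target_frames: magnitude spectra of the mic we want to analyze.
    bleed_frames:  magnitude spectra of the other (bleeding) instrument's mic.
    delay_frames:  hypothetical delay, in analysis frames, of the bleed.
    bleed_gain:    hypothetical level of the bleed relative to its source.
    Magnitudes are floored at zero. Only the analysis signal is affected.
    """
    cleaned = []
    for i, frame in enumerate(target_frames):
        j = i - delay_frames
        if j < 0:
            # No delayed frame available yet: pass through unchanged.
            cleaned.append(list(frame))
            continue
        other = bleed_frames[j]
        cleaned.append([max(0.0, a - bleed_gain * b)
                        for a, b in zip(frame, other)])
    return cleaned
```

The cleaned spectra would be fed to the feature extractor only, while the audio path to the effects stays untouched, so the extra latency is confined to the analysis.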

Video digest

appropriate to note anyway. During the blog writing about the