Philosophical and aesthetical perspectives

–report from meeting 16/12 Trondheim/Skype

Andreas Bergsland, Trond Engum, Tone Åse, Simon Emmerson, Øyvind Brandtsegg, Mats Claesson

The performers’ experiences of control:

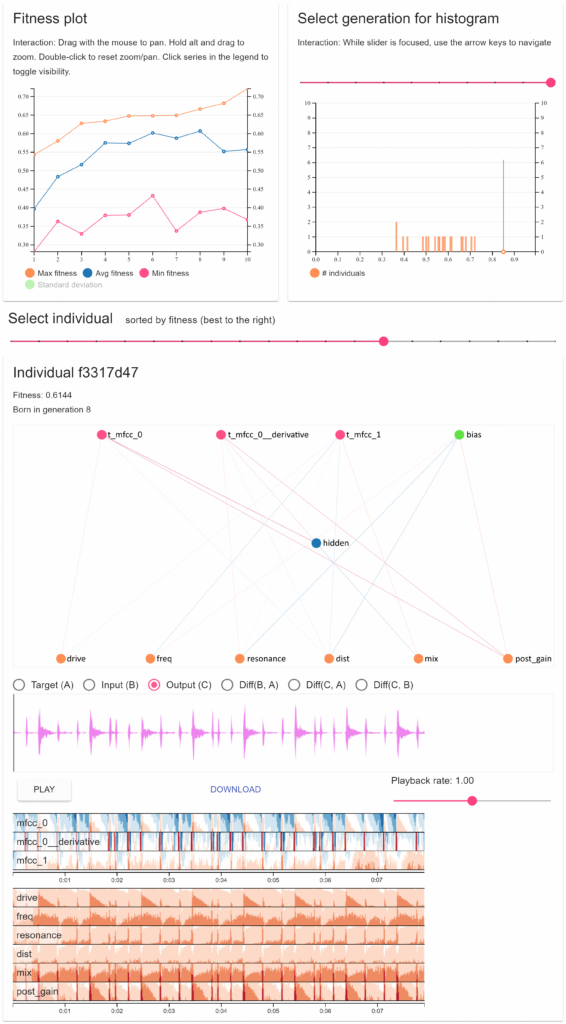

In the last session (the Trondheim December session), Tone and Carl Haakon (CH) worked with rhythmic regularity and irregularity as parameters in the analysis. They worked with the same kind of analysis, and the same kind of mapping from analysis to effect parameter. After first trying the opposite, they ended up with: regularity = less effect, irregularity = more effect. They also included a sample/hold/freeze effect in one of the exercises. Øyvind commented on how Tone stated in the video that she thought it would be hard to play with so little control, but that she experienced that they worked intuitively with this parameter, which he found to be an interesting contradiction. Tone also expressed in the video that on the one hand she would sometimes hope for some specific musical choices from CH (“I hope he understands”), but on the other hand she “enjoyed the surprises”.

These observations became a springboard for a conversation about a core issue in the project: the relationship between control and surprise, or between controlling and being controlled. What we try to point to here is the degree of specific and conscious intentional control, as opposed to “what just happens” due to technological, systemic, or accidental reasons. The experience from the Trondheim December session was that the musicians preferred what they experienced as an intuitive connection between input and outcome, and that this facilitated the process in the sense that they could “act musically”. (This “intuitive connection” is easily related to Simon’s comment about “making ecological sense” later in this discussion.) Mats commented that in the first Oslo session the performers stated that they felt a similarity to playing an acoustic instrument. He wondered if this experience had to do with the musicians’ involvement in the system setup, while Trond pointed out that the Trondheim December session and the Oslo session were pretty similar in this respect.

A further discussion about what “control”, “alienation” and “intuitive playing” can mean in these situations seems appropriate.

Aesthetic and individual variables

This led to a further discussion about how we should be aware that the need for generalising and categorising – which is necessary at some point to actually be able to discuss matters – can lead us to overlook important variable parameters such as:

- Each performer’s background, skills, working methods, aesthetics and preferences

- That styles and genres relate differently to this interplay

A good example of this is Maja’s statement in the Brak/Rug session that she preferred the surprising, disturbing effects, which gave her new energy and ideas. Tone noted that this is very easy to understand when you have heard Maja’s music, and even easier if you know her as an artist and person. It can be seen as a contrast to Tone/CH, who seek a more “natural” connection between action and sounding result: in principle they want the technology to enhance what they are already doing. But, as cited above, Tone commented that this is not the whole truth. Surprises are also welcome in the Tone/Carl Haakon collaboration.

Because of these variables, Simon underlined the need to pin down in each session what actually happens, and not necessarily set up dialectical pairs. Øyvind pointed out, on the other hand, the need to lay out possible extremes and oppositions to create some dimensions (and terms) along which language can be used to reflect on the matters at hand.

Analysing or experiencing?

Another individual variable, both as audience and performer, is the need to analyse, to understand what is happening when perceiving a performance. One example brought up in relation to this was Andreas’ experience of how his perspective as an audience member changed after he studied ear training. This new knowledge led him to take an analysing perspective, wanting to know what happened in a composition when performed. He also said: “as an audience you want to know things, you analyse”. Simon referred to “the inner game of tennis” as another example: how it is possible to stop appreciating playing tennis because you become too occupied analysing the game – thinking of the previous shot rather than clearing the mind ready for the next. Tone pointed at the individual differences between performers, even within the same genre (like harmonic jazz improvisation): some are very analytic, also in the moment of performing, while others are not. This also goes for the various groups of audiences: some are analytic, some are not, and there is most likely a continuum between the analytic and the intuitive musician/audience.

Øyvind mentioned experiences from presenting the crossadaptive project to several audiences over the last few months. One of the issues he would usually present is that it can be hard for the audience to follow the crossadaptive transformations, since it is an unfamiliar mode of musical (ex)change. However, when he then played some of the simpler examples (e.g. amplitude controlling reverb size and delay feedback), the response was that it was not hard to follow. One of the places where this happened was Santa Barbara, where Curtis Roads commented that he thought it quite simple and straightforward to follow. Then again, in the following discussion, Roads also conceded that it was simple because the mapping between analysis parameter and modulated effect was known. Most likely it would be much harder to deduce what the connection was by listening alone, since the connection (mapping) can be anything. Crossadaptive processing may be a complicated situation, not easy to analyse for either the audience or the performer. Øyvind pointed towards differences between parameters, as we had also discussed collectively: some are more “natural” than others, like the balance between amplitude and effect, while some are more abstract, like the balance between noise and tone, or regular/irregular rhythms.
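The simple example mentioned above (amplitude controlling reverb size and delay feedback) can be sketched as a mapping from one analysis value to two effect parameters. This is only an illustration, not the project's actual implementation: the function names, the normalised 0 to 1 amplitude range, and the output ranges are all assumptions chosen for the example.

```python
def map_range(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    if in_hi == in_lo:
        raise ValueError("input range must not be empty")
    t = (value - in_lo) / (in_hi - in_lo)
    t = min(1.0, max(0.0, t))  # keep the effect parameter inside its range
    return out_lo + t * (out_hi - out_lo)


def amplitude_to_effects(amplitude):
    """Hypothetical mapping: one performer's amplitude (assumed 0..1)
    drives two effect parameters on the other performer's sound."""
    return {
        # Output ranges are illustrative assumptions, not values from the sessions.
        "reverb_size": map_range(amplitude, 0.0, 1.0, 0.2, 0.9),
        "delay_feedback": map_range(amplitude, 0.0, 1.0, 0.0, 0.7),
    }


if __name__ == "__main__":
    for amp in (0.0, 0.5, 1.0):
        print(amp, amplitude_to_effects(amp))
```

Because the mapping is an arbitrary function, swapping the input feature or inverting the output range changes the musical result completely, which is one reason the connection can be hard to deduce by ear alone.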

Making ecological sense/playing with expectations

Simon pointed out that we have a long history of connections to sound: some connections are musically intuitive because we have used them perhaps for thousands of years; they make ‘ecological’ sense to us. He referred to Eric Clarke’s “Ways of listening: An ecological approach to the perception of musical meaning” (2005) and William Gaver’s “What in the world do we hear?: An ecological approach to auditory event perception” (1993). We come with expectations towards the world, and one way of making art is playing with those expectations. In modernist thinking there is a tendency to think of all musical parameters as equal – or at least equally organised – which may easily undermine their “ecological validity”, although that need not stop the creation of ‘good music’ in creative hands.

Complexity and connections

So, if the need for conscious analysis and understanding varies between musicians, is this also the case for the experienced connection between input and output? And what about the difference between playing and listening as part of the process, or just listening, whether as a musician, musicologist, or audience member? For Tone and Carl Haakon it seemed to be a shared experience that playing with regularity/non-regularity felt intuitive for both, while this was actually hard for Øyvind to believe, because he knew the current weaknesses in how he had implemented the analysis. Parts of the rhythmic analysis methods implemented are very noisy, meaning they produce results that can sometimes have significant (even huge) errors in relation to a human interpretation of the rhythms being analysed. The fact that the musicians still experienced the analyses as responding intuitively is interesting, and it could be connected to something Mats said later on: “the musicians listen in another way, because they have a direct contact with what is really happening”. So, perhaps, while Tone and CH experienced that some things really made musical sense, Øyvind focused on what didn’t work, which would be easier for him to hear?

So how do we understand this, and how is the analysis connected to the sounding result? Andreas pointed out that there is a difference between hearing and analysing: you can learn how the sound behaves and work with that. It might still be difficult to predict exactly what will happen. Tone’s comment here was that you can relate to a certain unpredictability and still have a sort of control over some larger “groups of possible sound results” that you can relate to as a musician. There is not only an urge to “make sense” (= to understand and “know” the connection) but also an urge to make “aesthetical sense”.

With regards to the experienced complexity, Øyvind also commented that the analysis of a real musical signal is in many ways a gross simplification, and by trying to make sense of the simplification we might actually experience it as more complex. The natural multidimensionality of the experienced sound is lost, due to the singular focus on one extracted feature. We are reinterpreting the sound as something simpler. An example mentioned was the vibrato, which is a complex input and a complex analysis, that could in some analyses be reduced to a simple “more or less” dimension. This issue also relates to the needs of our project to construct new methods of analysis, so that we can try to find analysis dimensions that correspond to some perceptual or experiential features.

Andreas commented: “It is quite difficult to really know what is going on without having knowledge of the system and the processes. Even simple mappings can be difficult to grasp only by ear”. After the meeting, Trond reminded us of a further complexity that was perhaps not so present in our discussion: we do not improvise with only one parameter “out of control” (adaptive processing). In the crossadaptive situation someone else is processing our own instrument, so we do not have full control over this output, and at the same time we do not have any control over the input to what we are processing (cross-adapting). Both cases could represent an alienation and perhaps a disconnection from the relation between input and result. And of course the experience of control is also connected to “understanding” the processing and analysis you are working with.

The process of interplay:

Øyvind referred to Tone’s experience of a musical “need” during the Trondheim session, expressed as “I hope he understands…” when she talked about the processes in the interplay. This points to how you realise during the interplay that you have very clear musical expectations and wishes towards the other performer. This is not in principle different from a lot of other musical improvising situations. Still, because you are dependent on the other’s response in a way that defines not only the whole, but your own part in it, this thought seemed to be more present than is usual in this type of interplay.

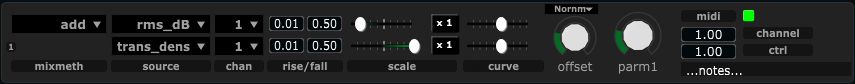

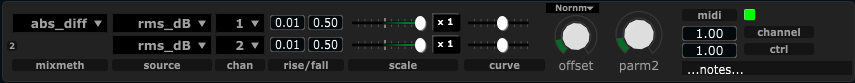

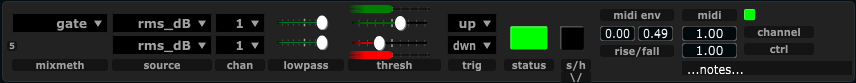

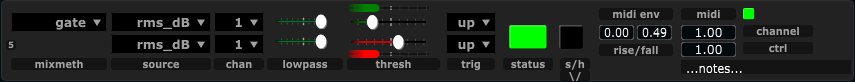

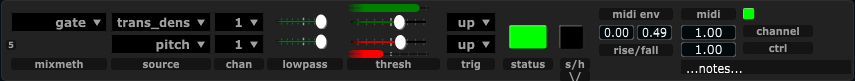

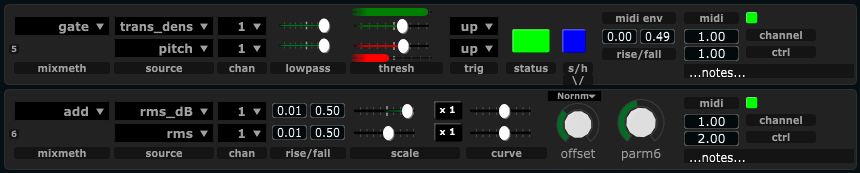

Tools and setup

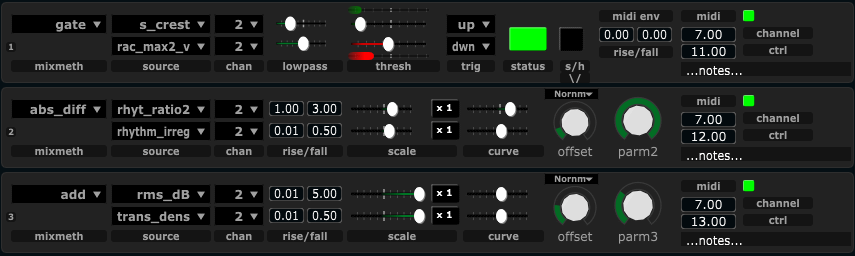

Mats commented that very many of the effects that are used are about room size, and that he felt that this had some (to him) unwanted aesthetical consequences. Øyvind responded that he wanted to start with effects that are easy to control and where the control is easy to hear. Delay feedback and reverb size are such effects. Mats also suggested that it was an important aesthetical choice not to have effects all the time, and thereby have the possibility to hear the instrument itself. So to what extent should you be able to choose? We discussed the practical possibilities here: some of the musicians (for example Bjørnar Habbestad) have suggested a foot pedal, with which the musician could control the degree to which their actions inflict changes on the other musician’s sound (or the other way around, control the degree to which other forces can affect their own sound). Trond suggested one could also have control over the level of signal/output for the effects, adjusting the balance between processed and unprocessed sound. As Øyvind commented, these types of control could be a pedagogical tool for rehearsing with the effect, turning the processing on and off to understand the mapping better. The tools are of course partly defining the musician’s balance between control, predictability and alienation.

Connected to this, we had a short discussion regarding amplified sound in general: the instrumental sound coming from a speaker located elsewhere in the room could in itself already represent an alienation. Simon referred to the Lawrence Casserley/Evan Parker principle of “each performer’s own processor”, and the situation before the age of the big PA, where the electronic sound could be localised to each musician’s individual output. We discussed possibilities and difficulties with this in a crossadaptive setting: which signal should come out of your speaker? The processed sound of the other, or the result of the other processing you? Or both? And then what would the function be, when the placement of the sound is already disturbed?
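The two controls discussed above, a pedal that scales how strongly one musician affects the other and a balance between processed and unprocessed sound, can be sketched as follows. This is a minimal illustration under assumed conventions (control values and pedal positions normalised to 0..1, audio as plain lists of samples); the function names are hypothetical and do not refer to the project's software.

```python
def clamp01(x):
    """Restrict a control value to the range 0..1."""
    return min(1.0, max(0.0, x))


def scale_modulation(control, pedal_depth):
    """Scale the incoming control signal (0..1) by the pedal position (0..1).

    With the pedal at 0 the other musician has no influence;
    at 1 the full modulation passes through.
    """
    return control * clamp01(pedal_depth)


def mix_dry_wet(dry, wet, balance):
    """Linear crossfade, sample by sample: balance 0 = unprocessed only, 1 = processed only."""
    balance = clamp01(balance)
    return [(1.0 - balance) * d + balance * w for d, w in zip(dry, wet)]


if __name__ == "__main__":
    print(scale_modulation(0.8, 0.5))                   # half of the modulation depth
    print(mix_dry_wet([1.0, 0.5], [0.0, 0.0], 0.5))     # equal mix of dry and wet
```

Setting the pedal or the balance to zero gives the on/off comparison mentioned as a rehearsal tool, since the unprocessed instrument can be heard against the same playing with processing applied.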

Rhythm

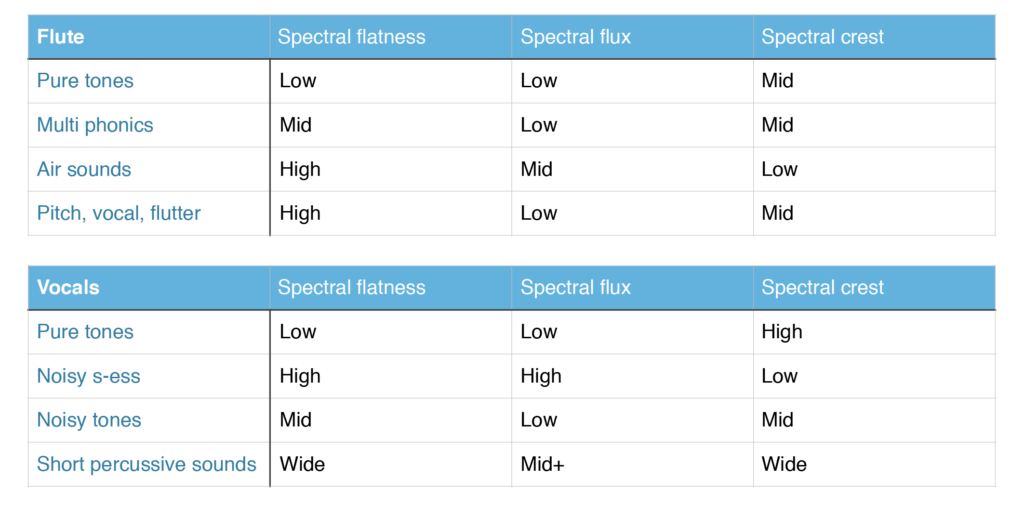

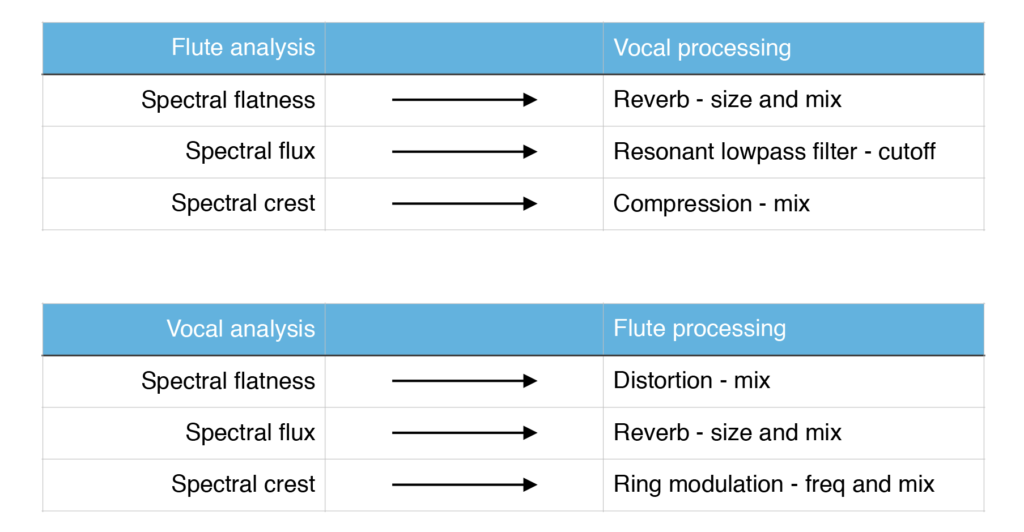

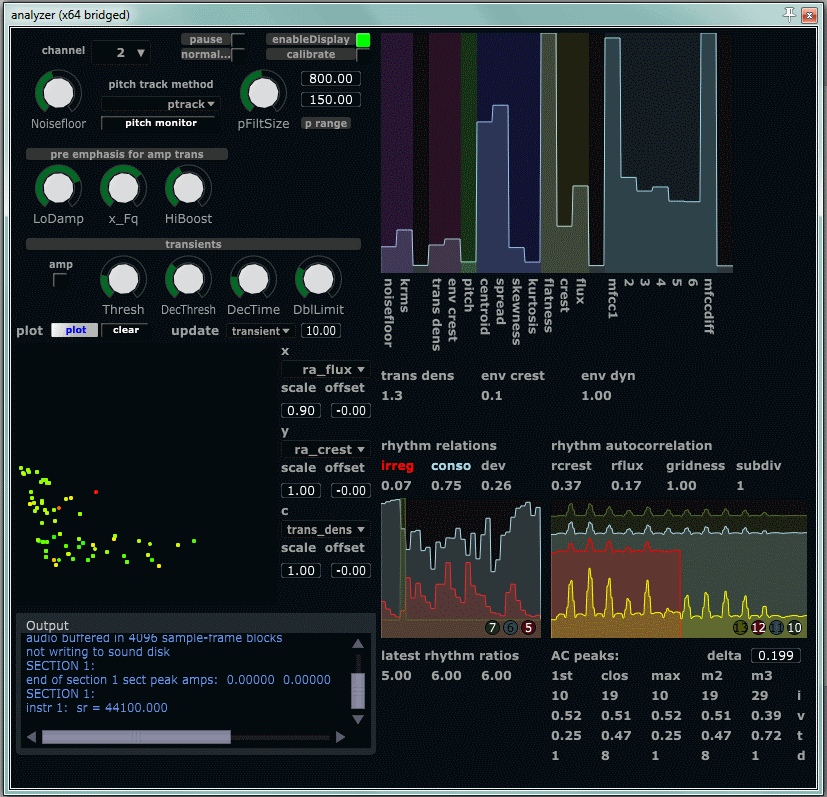

New in this session was the use of the rhythmical analysis. This is very different from all other parameters we have implemented so far. Other analyses relate to the immediate sonic character, but rhythmic analysis tries to extract some temporal features, patterns and behaviours. Since much of the music played in this project is not based on a steady pulse, and even less confined to a regular grid (meter), the traditional methods of rhythmic analysis will not be appropriate. Traditionally one will find the basic pulse, then deduce some form of meter based on the activity, and after this is done one can relate further activity to this pulse and meter. In our rhythmical analysis methods we have tried to avoid the need to first determine pulse and meter, but rather looked into the immediate time relationships between neighbouring events. This creates much less support for any hypothesis the analyser might have about the rhythmical activity, but also allows much greater freedom of variation (stylistically, musically) in the input. Øyvind is really not satisfied with the current status of the rhythmic analysis (even if he is the one mainly responsible for the design), but he was eager to hear how it worked when used by Tone and Carl Haakon. It seems that the live use by real musicians allowed the weaknesses of the analyses to be somewhat covered up. The musicians reported that they felt the system responded quite well (and predictably) to their playing. This indicates that, even if refinements are much needed, the current approach is probably a useful one. One thing that we can say for sure is that some sort of rhythmical analysis is an interesting area of further exploration, and that it can encode some perceptual and experiential features of the musical signal in ways that make sense to the performers. And if it makes sense to the performers, we might guess that it will have the possibility of making sense to the listener as well.
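To make the idea of looking only at “time relationships between neighbouring events” concrete, here is one possible sketch. It is not the analysis method used in the project: the irregularity measure (the mean absolute log ratio between neighbouring inter-onset intervals, squashed into 0..1) and all function names are assumptions made for illustration. The last function applies the mapping the musicians settled on, where more irregularity gives more effect.

```python
import math


def inter_onset_intervals(onsets):
    """Time differences between neighbouring event onsets (in seconds)."""
    return [b - a for a, b in zip(onsets, onsets[1:])]


def irregularity(onsets):
    """Assumed measure: mean absolute log2 ratio of neighbouring intervals, scaled to 0..1.

    No pulse or meter is estimated; 0.0 means perfectly even spacing.
    """
    iois = inter_onset_intervals(onsets)
    if len(iois) < 2:
        return 0.0  # too few events to compare intervals
    if any(i <= 0 for i in iois):
        raise ValueError("onsets must be strictly increasing")
    deviations = [abs(math.log2(b / a)) for a, b in zip(iois, iois[1:])]
    mean_dev = sum(deviations) / len(deviations)
    return mean_dev / (1.0 + mean_dev)  # squash into the range 0..1


def effect_amount(onsets):
    """Regularity = less effect, irregularity = more effect."""
    return irregularity(onsets)


if __name__ == "__main__":
    print(effect_amount([0.0, 0.5, 1.0, 1.5, 2.0]))   # even pulse
    print(effect_amount([0.0, 0.1, 0.9, 1.0, 2.3]))   # uneven spacing
```

A measure like this treats a repeating long-short pattern as irregular even though a listener may hear it as perfectly regular, which illustrates the kind of gap between the analysis and a human interpretation of rhythm that the text describes.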

Andreas: How do you define regularity (e.g. in claves-based musics)? How much “less regular” is that than a steady beat?

Simon: If you ask a difficult question with a range of possible answers this will be difficult to implement within the project.

As a follow up to the refinement of rhythmic analysis, Øyvind asked: how would *you* analyze rhythm?

Simon: I wouldn’t analyse rhythm. Take, for example, the timeline in African music: a guiding pulse that is not necessarily performed and may exist only in the performer’s head. (This relates directly to Andreas’s next point. Simon later withdrew the idea that he would not analyse rhythm and acknowledged its usefulness in performance practice.)

Andreas: Rhythm is a very complex phenomenon, which involves multiple interconnected temporal levels, often hierarchically organised. Perceptually, we have many ongoing processes involving present, past and anticipations about future events. It might be difficult to emulate such processes in software analysis. Perhaps pattern recognition algorithms can be good for analysing rhythmical features?

Mats: what is rhythm? In our examples: gesture may be more useful than rhythm

Øyvind: rhythm is repeatability, perhaps? Maybe we interpret this in the second after

Simon: no I think we interpret virtually at the same time

Tone: I think of it as a bodily experience first and foremost. (Thinking about this in retrospect, Tone adds: The impulses, when they are experienced as related to each other, create a movement in the body. I register that through the years (working with non-metric rhythms in free improvisation) there is less need for a periodical set of impulses to start this movement. But when I look at babies and toddlers, I recognise this bodily reaction to irregular impulses. I recognise what Andreas says: the movement is triggered by the expectation of more to come. Think about the difference in your body when you wait for the next impulse to come (anticipation) and when you know that it is over…)

Andreas: When you listen to rhythms, you group events on different levels and relate them to what was already played. The grouping is often referred to as «chunking» (psychology). Thus, it works both on an immediate level (now) and on a more overarching level (bar, subsection, section), because we have to relate what we hear to what came earlier. You can simplify or make it complex.